Explore Workflows

View already parsed workflows here or click here to add your own

| Graph | Name | Retrieved From | View |
|---|---|---|---|

|

|

gathered exome alignment and somatic variant detection for cle purpose

|

Path: definitions/pipelines/somatic_exome_cle_gathered.cwl Branch/Commit ID: 40097e1ed094c5b42b68f3db2ff2cbe78c182479 |

|

|

|

PGAP Pipeline, simple user input, PGAPX-134

PGAP pipeline for external usage, powered via containers, simple user input: (FASTA + yaml only, no template) |

Path: pgap.cwl Branch/Commit ID: 83ef15482f405bc3d24f88cbf405ceffea9b3023 |

|

|

|

Single-cell Format Transform

Transforms single-cell sequencing data formats into Cell Ranger-like output |

Path: workflows/sc-format-transform.cwl Branch/Commit ID: cc6fa135d04737fdde3b4414d6e214cf8c812f6e |

|

|

|

heatmap-prepare.cwl

Workflow runs the homer-make-tag-directory.cwl tool using scatter over the following inputs:

- bam_file
- fragment_size
- total_reads

`dotproduct` is used as the `scatterMethod`, so one element is taken from each array to construct each job:

1) bam_file[0] fragment_size[0] total_reads[0]
2) bam_file[1] fragment_size[1] total_reads[1]
...
N) bam_file[N] fragment_size[N] total_reads[N]

The `bam_file`, `fragment_size` and `total_reads` arrays must be in the same order. |

Path: tools/heatmap-prepare.cwl Branch/Commit ID: cc6fa135d04737fdde3b4414d6e214cf8c812f6e |
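The `dotproduct` pairing described for heatmap-prepare.cwl can be illustrated outside CWL. The following is a minimal Python sketch, not the workflow engine's implementation: the function name `dotproduct_jobs` and the sample file names and values are hypothetical, chosen only to show how the i-th element of each array ends up in the i-th job.

```python
from typing import Dict, List, Sequence


def dotproduct_jobs(inputs: Dict[str, Sequence]) -> List[dict]:
    """Pair the i-th element of every scattered array into one job (illustrative only)."""
    # A dotproduct scatter is only defined when all arrays have the same length.
    lengths = {len(values) for values in inputs.values()}
    if len(lengths) > 1:
        raise ValueError("dotproduct scatter requires arrays of equal length")
    names = list(inputs)
    # zip(*arrays) walks the arrays in lockstep, one tuple per job.
    return [dict(zip(names, row)) for row in zip(*inputs.values())]


# Hypothetical inputs; real values would come from the workflow's job file.
jobs = dotproduct_jobs({
    "bam_file": ["a.bam", "b.bam"],
    "fragment_size": [150, 200],
    "total_reads": [1000000, 2500000],
})
for job in jobs:
    print(job)
```

Because elements are matched purely by position, reordering one array without the others would silently pair a BAM file with the wrong fragment size or read count, which is why the three arrays have to share the same order.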

|

|

|

QuantSeq 3' mRNA-Seq single-read

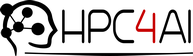

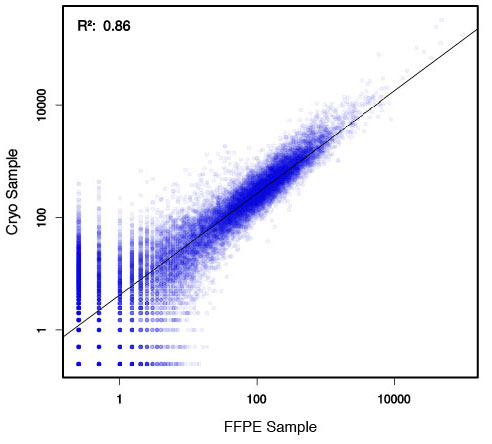

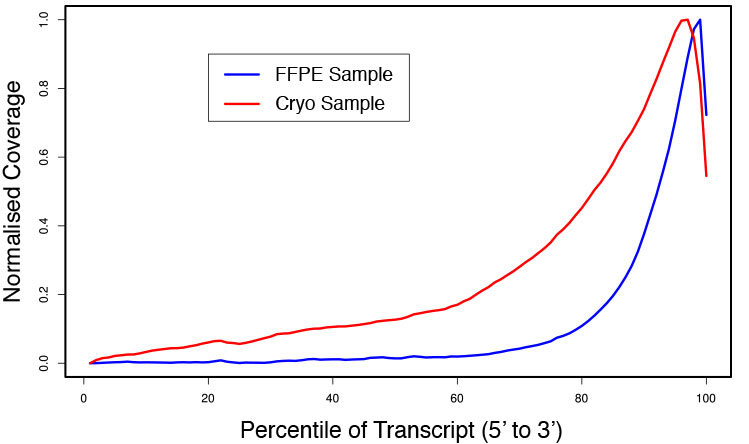

### Pipeline for Lexogen's QuantSeq 3' mRNA-Seq Library Prep Kit FWD for Illumina

[Lexogen original documentation](https://www.lexogen.com/quantseq-3mrna-sequencing/)

* Cost-saving and streamlined globin mRNA depletion during QuantSeq library preparation
* Genome-wide analysis of gene expression
* Cost-efficient alternative to microarrays and standard RNA-Seq
* Down to 100 pg total RNA input
* Applicable for low quality and FFPE samples
* Single-read sequencing of up to 9,216 samples/lane
* Dual indexing and Unique Molecular Identifiers (UMIs) are available

### QuantSeq 3’ mRNA-Seq Library Prep Kit FWD for Illumina

The QuantSeq FWD Kit is a library preparation protocol designed to generate Illumina-compatible libraries of sequences close to the 3’ end of polyadenylated RNA. QuantSeq FWD contains the Illumina Read 1 linker sequence in the second strand synthesis primer, hence NGS reads are generated towards the poly(A) tail, directly reflecting the mRNA sequence (see workflow). This version is the recommended standard for gene expression analysis. Lexogen also provides a high-throughput version with optional dual indexing (i5 and i7 indices), allowing up to 9,216 samples to be multiplexed in one lane.

#### Analysis of Low Input and Low Quality Samples

The required input amount of total RNA is as low as 100 pg. QuantSeq is suitable for reproducibly generating libraries from low quality RNA, including FFPE samples. See Fig. 1 and 2 for a comparison of two different RNA qualities (FFPE and fresh frozen cryo-block) of the same sample.

Figure 1 | Correlation of gene counts of FFPE and cryo samples.

Figure 2 | Venn diagrams of genes detected by QuantSeq at a uniform read depth of 2.5 M reads in FFPE and cryo samples with 1, 5, and 10 reads/gene thresholds.

#### Mapping of Transcript End Sites

With longer reads, QuantSeq FWD makes it possible to pinpoint the exact 3’ end of poly(A) RNA (see Fig. 3) and therefore to obtain accurate information about the 3’ UTR.

Figure 3 | QuantSeq read coverage versus normalized transcript length of NGS libraries derived from FFPE-RNA (blue) and cryo-preserved RNA (red).

### Current workflow should be used only with single-end RNA-Seq data. It performs the following steps:

1. Separates UMIs and trims adapters from the input FASTQ file
2. Uses `STAR` to align reads from the input FASTQ file according to the predefined reference indices; generates an unsorted BAM file and an alignment statistics file
3. Uses `fastx_quality_stats` to analyze the input FASTQ file and generates a quality statistics file
4. Uses `samtools sort` to generate a coordinate-sorted BAM(+BAI) file pair from the unsorted BAM file obtained in step 2 (after running STAR)
5. Uses `umi_tools dedup` to generate the final filtered, sorted BAM(+BAI) file pair
6. Generates a BigWig file based on the sorted BAM file
7. Maps the input FASTQ file to predefined rRNA reference indices using `bowtie` to determine the level of rRNA contamination; exports the resulting statistics to a file
8. Calculates isoform expression levels for the sorted BAM file and GTF/TAB annotation file using the GEEP reads-counting utility; exports the results to a file |

Path: workflows/trim-quantseq-mrnaseq-se.cwl Branch/Commit ID: c6bfa0de917efb536dd385624fc7702e6748e61d |

|

|

|

count-lines2-wf.cwl

|

Path: cwltool/schemas/v1.0/v1.0/count-lines2-wf.cwl Branch/Commit ID: 0e98de8f692bb7b9626ed44af835051750ac20cd |

|

|

|

etl_http.cwl

|

Path: workflows/dnaseq/etl_http.cwl Branch/Commit ID: 6698fbd8d0a6155d2008d4e89ab1110fbef9ebbf |

|

|

|

Cellranger reanalyze - reruns secondary analysis performed on the feature-barcode matrix

Devel version of Single-Cell Cell Ranger Reanalyze
==================================================

Workflow calls the "cellranger reanalyze" command to rerun secondary analysis performed on the feature-barcode matrix (dimensionality reduction, clustering and visualization) using different parameter settings. As input, it takes filtered feature-barcode matrices in HDF5 format from cellranger count or aggr experiments. Note that aggregation_metadata from the upstream cellranger aggr step is not passed; this will need to be addressed when required. |

Path: workflows/cellranger-reanalyze.cwl Branch/Commit ID: 64f7fe4438898218fd83133efa25251078f5b27e |

|

|

|

default-dir5.cwl

|

Path: tests/wf/default-dir5.cwl Branch/Commit ID: 819c81af5449ec912bbbbead042ad66b8d3fd8d4 |

|

|

|

wgs alignment with qc

|

Path: definitions/pipelines/wgs_alignment.cwl Branch/Commit ID: 1437aed13d240fd624f78df2c7efb096c5079d73 |